Understanding LSTM (Long Short-Term Memory)

Recurrent neural networks (RNNs) suffer from the vanishing gradient problem on long sequences: some information is lost at every time step, so the longer the sequence, the less the network retains of its earliest inputs. Long Short-Term Memory (LSTM) is a technique designed to address this.

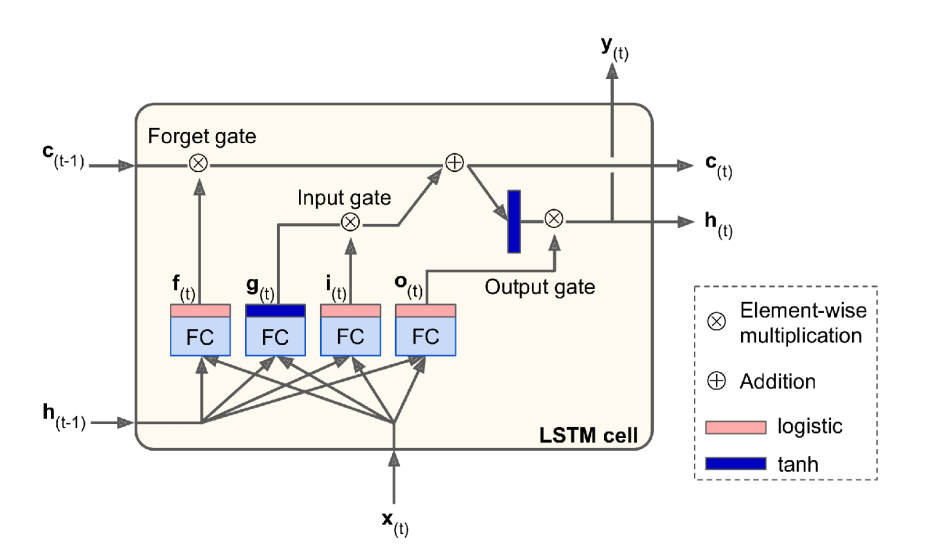

An LSTM cell contains three gates:

- Forget gate

- Input gate

- Output gate

The forget gate (controlled by f(t)) decides which parts of the long-term state should be erased.

The input gate (controlled by i(t)) decides which parts of the new candidate values should be added to the long-term state.

The output gate (controlled by o(t)) decides which parts of the long-term state should be read and output at this time step.
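
To make the idea of a gate concrete, here is a minimal NumPy sketch of a gate acting as an element-wise mask. It assumes the gate values come from a sigmoid, so each entry lies between 0 and 1. The state and pre-activation values are made up for illustration.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical long-term state with four units (made-up values).
c_prev = np.array([2.0, -1.0, 0.5, 3.0])

# Hypothetical forget-gate pre-activations (made-up values).
f_logits = np.array([10.0, -10.0, 0.0, 10.0])
f = sigmoid(f_logits)  # close to 1, close to 0, exactly 0.5, close to 1

# Element-wise product: entries near 1 keep a memory, entries near 0 erase it.
kept = f * c_prev
print(np.round(kept, 3))
```

The first and last units are kept almost unchanged, the second is almost fully erased, and the third is halved.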

An LSTM cell works like a basic RNN cell, except that its state is split into two vectors: h(t), the short-term state, and c(t), the long-term state.

The long-term state c(t-1) traverses the network from left to right. It first goes through the forget gate, dropping some memories, and then through the input gate, adding new memories. The result, c(t), goes straight out without any further transformation.

The long-term state c(t) is also copied and passed through the tanh function, and the result is filtered by the output gate. This produces the short-term state h(t).
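
The two state updates described above can be sketched in a few lines of NumPy. This is only an illustration: the function name `update_states` and the name `g` for the candidate memories are assumptions, and the gate values are hand-picked rather than learned.

```python
import numpy as np

def update_states(c_prev, f, i, g, o):
    """One LSTM state update, given already-computed gate values.

    c_prev : previous long-term state c(t-1)
    f, i, o: forget, input and output gate values, each in (0, 1)
    g      : candidate memories (assumed name), each in (-1, 1)
    """
    c = f * c_prev + i * g   # drop some memories, then add new ones
    h = o * np.tanh(c)       # copy c(t), squash with tanh, filter by output gate
    return h, c

# Hand-picked values: unit 0 keeps its old memory, unit 1 replaces it.
c_prev = np.array([1.0, -2.0])
f = np.array([1.0, 0.0])
i = np.array([0.0, 1.0])
g = np.array([0.5, 0.5])
o = np.array([1.0, 1.0])

h, c = update_states(c_prev, f, i, g, o)
print("c(t) =", c)
print("h(t) =", h)
```

With these values, the first unit carries its old memory forward untouched while the second unit discards its old memory and stores the new candidate.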

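The input vector x(t) and the previous short-term state h(t-1) are fed to four different fully connected layers. Three of them use a sigmoid activation and produce the gate values f(t), i(t), and o(t); the fourth produces the candidate values that the input gate filters.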
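
As a rough sketch, the four fully connected layers can be computed in one pass by stacking their weights into a single matrix and splitting the result. The names `gate_layers`, `W_x`, `W_h`, `b`, and `g` are assumptions made for this example, and the weights are random rather than trained.

```python
import numpy as np

def sigmoid(z):
    """Logistic function: maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def gate_layers(x, h_prev, W_x, W_h, b):
    """Apply the four fully connected layers to x(t) and h(t-1).

    W_x: shape (4 * n_units, n_inputs), the four input weight matrices stacked
    W_h: shape (4 * n_units, n_units), the four recurrent weight matrices stacked
    b  : shape (4 * n_units,), the four bias vectors stacked
    """
    z = W_x @ x + W_h @ h_prev + b
    z_f, z_i, z_o, z_g = np.split(z, 4)  # one slice per layer
    # Three sigmoid layers give the gates; the tanh layer gives the candidates.
    return sigmoid(z_f), sigmoid(z_i), sigmoid(z_o), np.tanh(z_g)

rng = np.random.default_rng(0)
n_inputs, n_units = 3, 2
W_x = rng.normal(size=(4 * n_units, n_inputs))
W_h = rng.normal(size=(4 * n_units, n_units))
b = np.zeros(4 * n_units)

x = rng.normal(size=n_inputs)  # input vector x(t)
h_prev = np.zeros(n_units)     # previous short-term state h(t-1)

f, i, o, g = gate_layers(x, h_prev, W_x, W_h, b)
print(f.shape, i.shape, o.shape, g.shape)
```

Stacking the weights is only a convenience; four separate matrix multiplications would give the same result.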

Here are the equations for all the gates:
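
A sketch of the standard LSTM formulation, written with the symbols used above. The weight matrices W_xf, W_hf, and so on, the bias vectors b_f, b_i, b_o, b_g, and the name g(t) for the output of the fourth layer are notational assumptions, not taken from the original. Here σ is the logistic (sigmoid) function and ⊙ is element-wise multiplication.

$$
\begin{aligned}
f(t) &= \sigma\left(W_{xf}\, x(t) + W_{hf}\, h(t-1) + b_f\right) \\
i(t) &= \sigma\left(W_{xi}\, x(t) + W_{hi}\, h(t-1) + b_i\right) \\
o(t) &= \sigma\left(W_{xo}\, x(t) + W_{ho}\, h(t-1) + b_o\right) \\
g(t) &= \tanh\left(W_{xg}\, x(t) + W_{hg}\, h(t-1) + b_g\right) \\
c(t) &= f(t) \odot c(t-1) + i(t) \odot g(t) \\
h(t) &= o(t) \odot \tanh\left(c(t)\right)
\end{aligned}
$$

The first four lines are the four fully connected layers, the fifth is the long-term state update, and the last produces the short-term state, which is also the output of the cell at this time step.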

