Knowledge Distillation

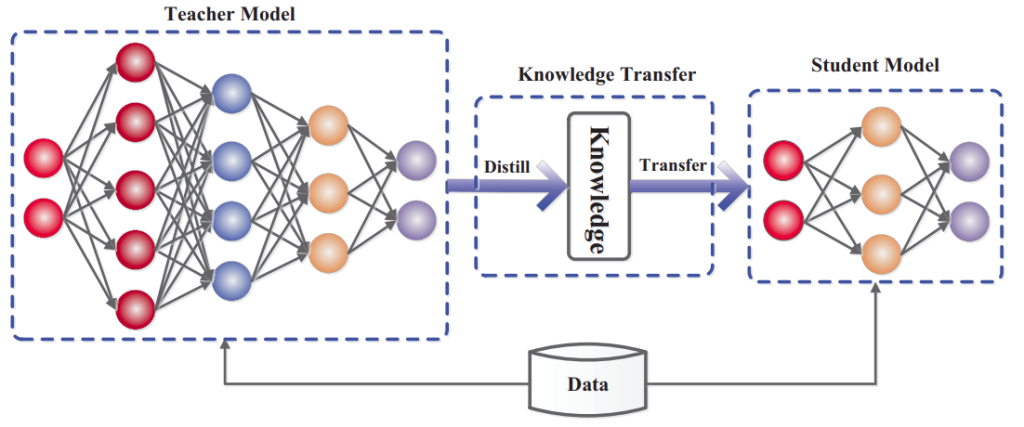

Knowledge distillation is the process of transferring knowledge from a large model to a smaller one. Smaller models are needed for less powerful hardware, such as mobile and edge devices. Knowledge distillation involves two models:

- Teacher model (the large model)

- Student model (the smaller, distilled model)
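
A minimal sketch of the classic teacher-student objective from Hinton et al., which is also the standard example of response-based distillation. It is written in plain Python so it runs without a deep learning framework. The student is trained on a weighted mix of two terms: the KL divergence between the teacher's and the student's temperature-softened output distributions (scaled by T^2), and the usual cross-entropy against the true label. The function names, the toy logits, and the defaults `temperature=4.0` and `alpha=0.5` are illustrative assumptions, not values taken from the resources listed here.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) for two discrete distributions over the same classes."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def cross_entropy(probs: List[float], label: int) -> float:
    """Standard cross-entropy against a single hard label."""
    return -math.log(probs[label])


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    label: int,
    temperature: float = 4.0,  # illustrative default, tune per task
    alpha: float = 0.5,  # illustrative weight of the soft-target term
) -> float:
    """Weighted sum of a soft-target (teacher) term and a hard-label term."""
    # Soft targets: the student matches the teacher's softened distribution.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    # Multiply by T^2 so the soft-target term keeps a comparable gradient
    # magnitude when the temperature changes (as suggested by Hinton et al.).
    soft = kl_divergence(p_teacher, p_student) * temperature ** 2
    # Hard targets: ordinary cross-entropy at temperature 1.
    hard = cross_entropy(softmax(student_logits), label)
    return alpha * soft + (1 - alpha) * hard


# Hypothetical outputs for a 3-class problem where class 0 is correct.
teacher_logits = [8.0, 2.0, 1.0]
student_logits = [3.0, 1.5, 0.5]
print(softmax(teacher_logits, 1.0))  # close to one-hot
print(softmax(teacher_logits, 4.0))  # softer: the wrong classes get visible mass
print(distillation_loss(student_logits, teacher_logits, label=0))
```

The softened teacher distribution is what carries the extra information: it tells the student not only which class is right but how the teacher ranks the wrong ones. In a PyTorch training loop the soft-target term is usually computed with `torch.nn.functional.log_softmax` on the student logits and `torch.nn.functional.kl_div`, with the teacher frozen and only the student's parameters updated.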

There are three types of knowledge distillation:

- Response-based distillation

- Feature-based distillation

- Relation-based distillation
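
Response-based distillation trains the student on the teacher's final outputs. The other two types supervise different signals: feature-based distillation matches intermediate representations, and relation-based distillation matches how samples relate to one another. The sketch below illustrates one simple loss of each kind in plain Python, assuming small vectors stand in for real feature maps. The projection matrix `proj` is a hypothetical stand-in for a layer that would be learned in practice (the student's features are usually narrower than the teacher's), and the relation loss is a simplified pairwise-distance matching loss loosely following relational KD, using squared error instead of a Huber loss for brevity.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def matvec(w: Matrix, v: Vector) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def feature_loss(student_feat: Vector, teacher_feat: Vector, proj: Matrix) -> float:
    """Feature-based: project the student feature into the teacher's
    dimension, then penalize the distance to the teacher feature."""
    return mse(matvec(proj, student_feat), teacher_feat)


def pairwise_distances(batch: List[Vector]) -> Vector:
    """Euclidean distance for every unordered pair (i < j) in the batch."""
    return [
        math.dist(batch[i], batch[j])
        for i in range(len(batch))
        for j in range(i + 1, len(batch))
    ]


def relation_loss(student_batch: List[Vector], teacher_batch: List[Vector]) -> float:
    """Relation-based: match the structure between samples, not the
    embeddings themselves, so the two embedding sizes may differ.
    Assumes each batch has at least two distinct samples."""
    d_s = pairwise_distances(student_batch)
    d_t = pairwise_distances(teacher_batch)
    # Normalize by the mean distance so the overall scale of each space cancels out.
    mean_s = sum(d_s) / len(d_s)
    mean_t = sum(d_t) / len(d_t)
    return mse([d / mean_s for d in d_s], [d / mean_t for d in d_t])


# Hypothetical toy data: a 2-d student feature vs. a 3-d teacher feature.
proj = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # would be learned in practice
print(feature_loss([1.0, 2.0], [1.0, 2.0, 1.5], proj))  # prints 0.0

# Same geometry, different dimension and scale: relation loss is about zero.
student_batch = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]]
teacher_batch = [[0.0, 0.0, 0.0], [3.0, 0.0, 0.0], [0.0, 6.0, 0.0]]
print(relation_loss(student_batch, teacher_batch))
```

The toy relation example shows the design choice behind relation-based distillation: the student's embeddings can live in a smaller space and at a different scale, as long as the pattern of distances between samples matches the teacher's. In practice either term is added, with a weight, to the student's ordinary task loss.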

For more information, check out the resources below:

Papers

- Distilling the Knowledge in a Neural Network by Hinton et al.

- Knowledge Distillation: A Survey by Gou et al.

- KD-Lib: A PyTorch library for Knowledge Distillation, Pruning and Quantization by Shah et al.

Blog

- A beginner's guide to knowledge distillation

- Knowledge Distillation by Jose Horas

- Knowledge Distillation with PyTorch